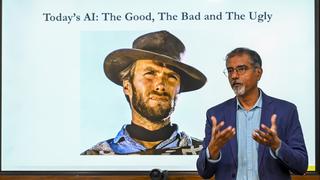

Balaji Padmanabhan, director, Center for Artificial Intelligence in Business, Robert H. Smith School of Business, University of Maryland, speaking at a discussion on AI.

Photo Credit: SRINATH M.

Artificial Intelligence may appear magical today, but it is grounded in decades of evolving logic and computation, said Balaji Padmanabhan, director, Center for Artificial Intelligence in Business, Robert H. Smith School of Business, University of Maryland.

He was speaking at a discussion hosted by The Hindu on the growing influence and integration of AI across domains.

Beginning with the earliest chatbots of the 1960s, Prof. Padmanabhan spoke about how definitions of intelligence have evolved. Human intelligence, he said, is shaped by memory and experience, while AI systems learn from data and patterns.

“Everything in AI is a number,” he said, explaining how the concept of embedding allows machines to convert even abstract ideas into computable forms. Learning in AI happens through sequences of inputs and outputs, generating patterns and predictions at scale.
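To make the idea of an embedding concrete, here is a minimal sketch in Python. It assumes three hand-picked, purely hypothetical word vectors (real models learn vectors with hundreds or thousands of dimensions from data) and uses cosine similarity, one common way of measuring how close two embeddings are, so that "closeness in meaning" becomes simple arithmetic.

```python
import math

# Hypothetical, hand-picked 3-dimensional "embeddings" for illustration only.
# Real systems learn these numbers from large amounts of data.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Return the other word whose embedding points in the closest direction."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))

if __name__ == "__main__":
    print(most_similar("king"))  # queen
```

With these toy numbers, "king" lands nearer to "queen" than to "apple", which is the sense in which an abstract idea becomes something a machine can compute with.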

Referencing AlphaGo, Google DeepMind’s system that defeated human champions at the board game Go, he elaborated on reinforcement learning, in which machines learn through trial, error and feedback, though such systems still rely heavily on human-led design and training.
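The trial-error-feedback loop can be illustrated with a far simpler setting than Go. AlphaGo itself combined deep neural networks with tree search and reinforcement learning; the Python sketch below is only a stand-in, an "epsilon-greedy multi-armed bandit", where the reward probabilities, step count and exploration rate are hypothetical choices. Those choices are made by a person, which echoes the point that such systems still depend on human design.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns which action pays off best from feedback alone.

    reward_probs: hidden probability that each action returns a reward of 1
                  (hypothetical values chosen by the designer).
    Returns the agent's learned estimate of each action's value.
    """
    rng = random.Random(seed)
    n = len(reward_probs)
    estimates = [0.0] * n  # learned value of each action
    counts = [0] * n       # how many times each action has been tried

    for _ in range(steps):
        # Trial: usually pick the best-looking action, occasionally explore at random.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: estimates[a])

        # Feedback: the environment returns a reward of 1 or 0.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0

        # Learning from error: move the estimate toward the observed reward
        # (an incremental running mean).
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates

if __name__ == "__main__":
    print(run_bandit([0.2, 0.5, 0.8]))
```

Nobody tells the agent which action is best; it discovers that the third action pays off most often purely from repeated attempts and the rewards they return.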

He identified education, healthcare, and the military as major sectors where AI is gaining ground. The discussion also referred to Alpha, a school in Austin that uses AI to personalise learning.

Responding to a question on AI regulation, Prof. Padmanabhan pointed out that even conventional teaching lacked clearly defined guardrails, and AI was no different.

The discussion included questions on lateral thinking, embedded archives, AI in sports, games, and pain analysis.

Emerging threat

He also flagged prompt injection attacks as an emerging threat, in which manipulated inputs could push AI systems, especially large language models, into unintended or unsafe behaviour.

On broader implications, he noted that reliance on AI may reshape human memory and cognition over time.

Addressing governance, he emphasised the need for strong verification architecture, fair use policies, future-oriented planning, and compliance frameworks. “We need to govern systems, not just AI,” he said.

Published – August 01, 2025 12:46 am IST
